10 August 2025

21:31

I subscribe to Medium (don’t judge), and their weekly summary pushed an article to me entitled The Postgres Revolution Is Here: Why Version 18 Changes Everything for Developers. Well, OK, that sounds relevant to my interests. Let’s check it out!

Oh.

Oh, dear.

Oh, sweet Mother of God.

The author lists 10 new incredible amazing features in PostgreSQL version 18 (which is in beta right now, you should check it out). Of the features he describes:

- Four are described basically correctly, but all of them were introduced in earlier versions of PostgreSQL.

- Two kind of sort of exist, but they were introduced before PostgreSQL v18 and the author gets details wrong (in some cases, completely and totally wrong).

- And four are pure hallucinations. Those features don’t exist, at all, anywhere.

The only explanation is that this article was a cut and paste from an LLM. If you wanted a good example of why using LLMs for technical advice is a bad idea, here you go.

Here are the Amazing New Features the author talks about, and what’s wrong with each of them:

1. MERGE Gets Real: No More Hacky UPSERT Workarounds

MERGE has been in PostgreSQL since version 15. There are some incremental improvements in version 18, but they are far from revolutionary.

2. Parallel COPY: Data Ingestion at Warp Speed

There is no such feature in PostgreSQL version 18 (or any other version of PostgreSQL).

3. JSON_TABLE: SQL and JSON, Finally in Sync

JSON_TABLE was introduced in PostgreSQL version 17.

4. Logical Replication of Schema Changes: The DDL Dream

This feature does not appear in PostgreSQL version 18.

5. Disk I/O Telemetry: pg_stat_io Arrives

pg_stat_io was introduced in PostgreSQL version 16. None of the columns described in the article exist in pg_stat_io.

6. Zstandard Compression: Store More, Pay Less

PostgreSQL introduced zstd compression in version 15, but not for TOAST tables: it covers things like pg_basebackup backups (and, as of version 16, pg_dump archives). PostgreSQL version 18 does not have it for TOAST tables either.

Further, that’s not the right syntax for setting compression for extended objects. PostgreSQL tables don’t have “toast compression”; objects are compressed both in the main table and in TOAST tables. The syntax shown is for setting storage parameters on a table, but there is no storage parameter toast_compression.

7. The Index Advisor: PostgreSQL Gets Smart

Oh for fuck’s sake. There is no such thing as pg_stat_plans in community PostgreSQL. There is a third-party module pg_stat_plans that has not been updated in 12 years. You want index advice, plunk down the money for pganalyze.

8. IO-Aware Autovacuum: No More Query Spikes

There is no such GUC autovacuum_io_throttle_target in version 18 or any other PostgreSQL version.

9. Per-Column Collation: Globalization Made Simple

This feature has been in PostgreSQL so long it’s not even worth my time to look up when it was introduced. A decade or more, at least.

10. Multirange Queries: Write Less, Query More

Multirange types were introduced in PostgreSQL version 14. That’s not the right syntax for constructing multirange types. There’s no bare type multirange: there are specific ones for the types of the bounds of the range, such as int4multirange.

My brain hurts now.

It’s pretty clear where whatever LLM the author used got the information: It digested mailing list posts that asked for or suggested features, blog posts that also got information wrong, and random GitHub repos. LLMs don’t know what is “true,” just what they’ve read.

I am sure it took me much longer to write this than it took the author to write the article. We as an industry (and as a society) are setting ourselves up for failure by relying on the intelligence of something that can’t think and can’t check its work.

2 April 2025

09:30

Sometimes, we run into a client who has port 5432 exposed to the public Internet, usually as a convenience measure to allow remote applications to access the database without having to go through an intermediate server application.

Do not do this.

This report of a “security issue” in PostgreSQL is alarmist, because it’s a basic brute-force attack on PostgreSQL, attempting to get superuser credentials. Once it does so, it uses the superuser’s access to the underlying filesystem to drop malware payloads.

There’s nothing special about this. You could do this with password-auth ssh.

But it’s one more reason not to expose PostgreSQL’s port to the public. There are others:

- You open yourself up to a DDOS attack on the database itself. PostgreSQL is not hard to do a DOS attack on, since each incoming connection forks a new process.

- There have been, in the past, bugs in PostgreSQL that could cause data corruption even if the incoming connection was not authenticated.

As good policy:

- Always have PostgreSQL behind a firewall. Ideally, it should have a non-routable private IP address, and only applications that are within your networking infrastructure can get at it.

- Never allow remote logins by superusers.

- Make sure your access controls (pg_hba.conf, AWS security groups, etc.) are locked down to the minimum level of access required.

28 January 2025

08:16

PostgreSQL version 12 introduced a new option on the VACUUM command, INDEX_CLEANUP. You should (almost) never use it.

First, a quick review of how vacuuming works on PostgreSQL. The primary task of vacuuming is to find dead tuples (tuples that still exist on disk but can’t ever be visible to any transaction anymore), and reclaim them as free space. At a high level, vacuuming proceeds as:

- Find a big batch of dead tuples (how big is “big” is a function of maintenance_work_mem, or autovacuum_work_mem for autovacuum).

- Remove that batch of dead tuples from all indexes.

- Remove them from the heap (the main table).

- Cycle until the whole table has been scanned.

Most of the time in vacuuming is spent removing the dead tuples from the indexes. It has to do this first, because otherwise, you would have tuple references in the indexes that are now invalid… or, worse, look like they are valid but point to wrong tuples!

INDEX_CLEANUP OFF reduces the amount of cleanup that vacuuming can do. It does release the space that dead tuples take up, but leaves the line pointers in place (it has to, so that the indexes are still pointing at the right location).

(The documentation does explain this, but uses terminology that can be unfamiliar to a lot of PostgreSQL users.)

Why would you ever use it, then? The other thing that vacuuming does is mark any tuples which can always be visible to any transaction (until modified or deleted) as “frozen.” This is an important operation to prevent data corruption due to PostgreSQL’s 32-bit transaction ID (you can get more information about freezing here).
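To make the 32-bit problem concrete, here is a minimal sketch in Python of circular transaction ID comparison. It assumes the usual modulo-2^32 scheme, in which one ID counts as older than another only if it is less than 2^31 steps behind it. The name xid_precedes is made up for this illustration, and the handful of reserved special IDs is ignored.

```python
XID_MODULUS = 2 ** 32  # transaction IDs are 32-bit counters that wrap around


def xid_precedes(a: int, b: int) -> bool:
    """Return True if transaction ID a is older than b in circular order."""
    # Treat (a - b) as a signed 32-bit number: "negative" means a is older.
    diff = (a - b) % XID_MODULUS
    return diff >= XID_MODULUS // 2


# An ordinary comparison: 100 happened before 200.
print(xid_precedes(100, 200))

# After the counter wraps, a numerically huge ID is still older than a small one.
print(xid_precedes(4_000_000_000, 100))

# A row written more than 2**31 transactions ago stops looking old at all.
print(xid_precedes(100, 100 + 2 ** 31 + 1))
```

The last case is the dangerous one: an unfrozen row that old would suddenly appear to be in the future, which is what freezing exists to prevent.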

Sometimes, for one reason or another, PostgreSQL has been unable to completely “freeze” a table, and the database can get dangerously close to the point that the wraparound data corruption can occur. Since vacuuming the indexes takes most of the time, sometimes, you want to tell PostgreSQL to skip that step, and just vacuum the heap so that the wraparound danger has passed. Thus, the INDEX_CLEANUP option.

But you still have to do a regular VACUUM on the table.

It’s not a substitute for one! And the worst possible situation is to do an INDEX_CLEANUP OFF vacuum on cron or something. The indexes will end up very badly bloated, which will hit query performance.

So, unless you are sure you know what you are doing (that is, you are in a wraparound-point emergency), please pretend this option doesn’t exist. Really.

1 December 2024

20:57

I am putting this here to remind myself that unless you actively delete them, .pyc files from .py files that no longer exist can hang out and cause all kinds of weird and exciting problems, and part of your deploys should be to delete all .pyc files.
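A minimal sketch of that cleanup step, using only the standard library. The function name delete_pyc_files is my own, and the directory you pass in is whatever your deploy root happens to be; treat this as a starting point rather than a finished deploy script.

```python
from pathlib import Path


def delete_pyc_files(root: str) -> int:
    """Delete every .pyc file under root and return how many were removed."""
    removed = 0
    # Collect the matches first so the tree is not modified while being walked.
    for pyc in list(Path(root).rglob("*.pyc")):
        if pyc.is_file():
            pyc.unlink()
            removed += 1
    return removed
```

Calling delete_pyc_files on the deploy directory before restarting the application removes both stray top-level .pyc files and the ones inside __pycache__ directories; Python regenerates the ones it still needs on the next import.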

22 November 2024

00:00

We’ve gone through a lot of detail about locales and collations here, but what should you do when it is time to set up a database? Here is a cookbook with some common scenarios, with recommendations.

“I want maximum speed, I am running on PostgreSQL version 17 or higher, and it’s OK if collation is whacky for non-7-bit-ASCII characters.”

Use the C.UTF-8 locale from the built-in locale provider.

CREATE DATABASE mydb locale_provider=builtin builtin_locale='C.UTF8' template=template0;

The built-in C.UTF-8 locale collates text based on the Unicode codepoints. You won’t get broken or invalid characters (as you would with the C/POSIX locale), but sortation will be incorrect for some languages. However, this gets you a good combination of reliable (if sometimes whacky) sort order, and very good performance. You also avoid any issues with underlying locale provider libraries changing.
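To get a feel for what “whacky” means here, this small Python sketch sorts a few words by code point. It is an illustration, not PostgreSQL itself: it relies on the fact that Python compares strings code point by code point, which is the same ordering rule described above. The word list is made up.

```python
# "\u00e9" is é and "\u00c4" is Ä, written as escapes to avoid any ambiguity.
words = ["zebra", "Zebra", "apple", "\u00e9clair", "\u00c4pfel"]

# Python's default string comparison is by code point.
by_code_point = sorted(words)
print(by_code_point)
```

Every upper-case ASCII letter sorts before every lower-case one, and the accented letters land after “z”, so “Zebra” comes first and “éclair” comes last. That is stable and fast, but not what a dictionary would do.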

“I want maximum speed, I am running on a version of PostgreSQL before 17, and I swear that I will never, ever put a character in the DB that is not 7-bit ASCII.”

Then you have my permission to use the C/POSIX locale.

CREATE DATABASE mydb locale='POSIX' template=template0;

C/POSIX locale just uses the C standard library function strcmp, so it does byte by byte comparisons, ignoring encoding entirely. This also avoids issues with locale provider libraries changing. If you do put in non-7-bit-ASCII characters, the sortation will be completely whacky, so don’t do that.

“I’m willing to trade off a little bit of speed so that my collations are reasonable across languages, or I am on a version before 17 and do want to use non-7-bit-ASCII characters.”

Use the ICU und-x-icu locale.

CREATE DATABASE mydb locale_provider=icu icu_locale='und-x-icu' encoding='UTF8' template=template0;

This provides reasonable collation order for most languages, and is (usually) fast compared to libc locales (except POSIX). The ICU libraries also tend to change in a breaking fashion less often than libc, although either one can change in an unfortunate way.

“I need my collation to be spot-on correct for one particular language, but that will be the only language in the database.”

Use either the libc or icu locale specific to your language requirements. Which one you use depends on which one will suit your needs better. Which one is faster depends on the specific collation, and icu has more features for getting very specific with the collation than libc does. If it is a tossup, icu libraries tend to have fewer breaking changes than libc libraries over time.

An example would be:

CREATE DATABASE mydb locale_provider=icu icu_locale='de-AT' encoding='UTF8' template=template0;

or

CREATE DATABASE mydb locale_provider=libc locale='de_AT.UTF-8' template=template0;

“My database will have different languages in it, and I’d like collation to be spot-on correct for those languages.”

Create the database as und-x-icu, and then put each language in its own table, or column within the table, specifying the appropriate collation for each.

```

CREATE DATABASE mydb locale_provider=icu icu_locale='und-x-icu' encoding='UTF8' template=template0;

CREATE COLLATION de_at (provider=icu, locale='de-AT');

CREATE TABLE stuff (de_at text COLLATE de_at);

```

Doing useful multi-language full-text search is (way) beyond the scope here.

“I am on PostgreSQL 17 or higher, and I never, ever want to worry about my locale provider library changing on me.”

Use the C.UTF-8 locale from the built-in locale provider.

CREATE DATABASE mydb locale_provider=builtin builtin_locale='C.UTF8' template=template0;

You will get slightly whacky collation for non-7-bit-ASCII characters, but you’ll never have to worry about the locale provider changing in a bad way.

“I am on a version of PostgreSQL earlier than 17, and I never, ever want to worry about my locale provider library changing on me.”

Then you are stuck using C/POSIX locale.

CREATE DATABASE mydb locale='POSIX' template=template0;

The good news is that if strcmp changes in some breaking way, we’ll all have a lot more to worry about than PostgreSQL collation (like Linux not working anymore). Remember that if you have non-7-bit-ASCII characters, they’ll collate in some unfortunate way.

“I’m using either libc or icu, and I am terrified that they will change underneath me and break things.”

The solution here is to:

- Upgrade to PostgreSQL 17.

- Never, ever run a primary and a binary replica on two different versions of the OS.

- When something underlying PostgreSQL changes (new OS, etc.), be sure to respond at once to the WARNING that is generated when a locale provider library changes by doing a cluster-wide REINDEX DATABASE.

“Do I really have to worry about this?”

Sorry, yes, you do. The good news is that you generally only have to worry about it when first setting up the database, or when moving a database to a new OS (or upgrading the OS or packages on an existing server).

And that concludes our look at locales in PostgreSQL! Thanks for reading.

20 November 2024

22:10

In previous installments in this series about locales and collations in PostgreSQL, we’ve made some vague allusions to the speed of the various collation functions. Let’s be a bit more analytical now.

The data here was gathered on a 4GB Linode instance running Ubuntu 24.04 and PostgreSQL 17.1. The test data was 1,000,000 records, each one a string of 64 random 7-bit ASCII characters. For each of the configurations, the test data was loaded into a table:


CREATE TABLE t(v varchar);

And then a simple sort on the data was timed (discarding the first run to control for cold-cache effects, and averaging over five others):


SELECT * FROM t ORDER BY v;

work_mem was set high enough that the sort would be executed in memory, and max_parallel_workers_per_gather was set to 0 to avoid different query plans obscuring the underlying library performance. This is hardly an exhaustive test of performance, but it gives a reasonable idea of the relationship between the various collation methods.
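If you want to reproduce something like this, the data generation and the timing discipline can be sketched in Python. This is not the script used for the numbers below. It assumes “random 7-bit ASCII” means printable, non-space characters, the function names are made up, and the demo times Python’s own sort; in a real run the callable would execute the SELECT through your database driver.

```python
import random
import string
import time


def make_test_strings(count: int, length: int = 64, seed: int = 0) -> list:
    """Generate `count` random strings of printable, non-space 7-bit ASCII."""
    rng = random.Random(seed)
    alphabet = string.ascii_letters + string.digits + string.punctuation
    return ["".join(rng.choices(alphabet, k=length)) for _ in range(count)]


def average_runtime(fn, runs: int = 5) -> float:
    """Call fn once as a discarded warm-up, then average `runs` timed calls."""
    fn()  # warm-up run, not timed
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs


rows = make_test_strings(1_000)  # the test in this post used 1,000,000 rows
print(average_runtime(lambda: sorted(rows)))
```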

| Locale Provider | Locale    | Time   |
| --------------- | --------- | ------ |
| builtin         | C.UTF8    | 486ms  |
| libc            | POSIX     | 470ms  |
| icu             | und-x-icu | 765ms  |
| icu             | de-AT     | 770ms  |
| libc            | de_AT     | 3645ms |

Unsurprisingly, the POSIX locale was the fastest. However, the built-in C.UTF8 collation was nearly as fast (really, within the margin of error), and produces reasonable and consistent results, if not exactly correct for many languages.

The other two icu tests used the und-x-icu locale (which provides a reasonable sort order for most languages) and a language-specific collation (German, Austrian region). Their performance was slower than C.UTF8 or POSIX, but it’s unlikely that by itself will cause a significant application performance issue, unless the application spends a lot of time sorting strings.

The libc equivalent to the de-AT icu locale took much longer than icu to complete the sort: almost 5x longer. There’s probably not a good reason to use the language-specific libc locales over the icu locales, especially with a performance difference that substantial. icu is also more consistent platform-to-platform than libc.

So, knowing all of this, what should you do? In our last installment, we’ll discuss some different scenarios and what locales to use for them.

15 November 2024

12:31

In this installment of our series on locales and PostgreSQL, we’ll talk about what can happen when the library that provides locales changes out from under a PostgreSQL database. It’s not pretty.

First, more about collations

Back in an earlier installment, we described a collation as a function that takes two strings, and returns if the strings are greater than, equal to, or less than each other:

coll(a, b) -> {greater than, equal, less than}

This is a slight oversimplification. What the actual collation function does is take a string and return a sort key:

coll(a) -> Sa

The sort keys have the property that if the strings they are derived from have (within the rules of the collation) a particular relationship, the sort keys do as well. This is very valuable, because it allows doing these comparisons repeatedly without having to rerun the (potentially expensive) collation function.

In the case of C locale, the collation function is the identity function: you compare exactly the same string of bytes that is present in the input. In more sophisticated locales, the collation function “normalizes” the input string into a string that can be repeatedly compared by just comparing the bytes.
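Here is a toy version of that idea in Python. The “collation” is made up for the example (ignore case, then compare bytes) and is not how any real locale works, but it shows the key property: the comparison result comes entirely from the sort keys, so each key only has to be computed once.

```python
def toy_sort_key(s: str) -> bytes:
    """A made-up collation: ignore case, then compare the UTF-8 bytes."""
    return s.casefold().encode("utf-8")


def toy_compare(a: str, b: str) -> int:
    """Return -1, 0 or 1, derived purely from the two sort keys."""
    ka, kb = toy_sort_key(a), toy_sort_key(b)
    return (ka > kb) - (ka < kb)


names = ["bob", "Alice", "alice", "Carol"]

# Each key is computed once; the sort itself only compares plain bytes.
print(sorted(names, key=toy_sort_key))
print(toy_compare("Alice", "alice"))
```

For real libc locales, Python’s locale.strxfrm plays the role of toy_sort_key.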

What is an index, anyway?

Everyone who works with databases has an intuitive idea of what an index is, but for our purposes, an index is a cache of a particular sortation of the input column. You can think of an index defined as:


CREATE INDEX on t(a) WHERE b = 1;

as a cache of the query predicate:


SELECT ... WHERE b = 1 ORDER BY a;

This allows the database engine to quickly find rows that use that predicate, as well as (often) retrieve a set of rows in sorted order without having to resort them.

The database automatically updates the “cache” when a row changes in a way that would alter the index. This generally works very, very well.

But:

Cache invalidation is hard.

Suppose that the collation function changes, but no one tells the database engine that. Now, the “cache” in the form of the index is invalid, since it holds the sort keys from the old collation function, but any new entries in the index will use the new sortation function.
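The failure is easy to simulate. In this Python sketch a sorted list stands in for the index, and two made-up key functions stand in for the old and new versions of the collation library. Nothing here is PostgreSQL code, and recomputing the keys on every lookup is only to keep the example short.

```python
import bisect


def old_key(s: str) -> str:
    """Hypothetical "old library" collation: case-insensitive."""
    return s.casefold()


def new_key(s: str) -> str:
    """Hypothetical "new library" collation: plain code point order."""
    return s


def index_contains(index: list, value: str, key) -> bool:
    """Binary-search a sorted "index", trusting that it is ordered by `key`."""
    keys = [key(entry) for entry in index]
    pos = bisect.bisect_left(keys, key(value))
    return pos < len(index) and index[pos] == value


# The index is built (sorted) while the old collation is in effect.
index = sorted(["apple", "Banana", "cherry", "Date"], key=old_key)

print(index_contains(index, "Banana", old_key))  # searched with the rules it was built with
print(index_contains(index, "Banana", new_key))  # same data, new rules
```

The first lookup finds the entry and the second does not, even though “Banana” never left the list. The binary search simply walks to the wrong place because the order it assumes is no longer the order the data is in.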

This has happened.

Version 2.28 of glibc included many significant collation changes. There are many situations in which a PostgreSQL database whose indexes were built on an earlier version might find itself running on the newer version:

- Upgrading the OS (for example, on Ubuntu, from a version before 18.10 to that version or later).

- Creating a binary replica on a newer version of the OS with the new glibc.

- Restoring from a PITR backup taken on the older version to a system running the newer version.

The result is that, suddenly, indexes aren’t working the way they should: the results from queries that use text indexes can be wrong. This is particularly perplexing in the binary replica case, since queries on the system running the older version behave correctly, while ones on the newer version are incorrect.

ICU has the same problem, although its collation functions change less often, so switching to ICU collations does not completely solve the problem.

Starting with version 10 (for ICU) and version 13 (for libc), PostgreSQL records the current version of the libraries being used as locale providers, and will issue a warning if the current version on the system does not match the version that was used previously:

WARNING: collation "xx-x-icu" has version mismatch

DETAIL: The collation in the database was created using version 1.2.3.4, but the operating system provides version 2.3.4.5.

HINT: Rebuild all objects affected by this collation and run ALTER COLLATION pg_catalog."xx-x-icu" REFRESH VERSION, or build PostgreSQL with the right library version.

This is a very good reason to monitor log output for warnings, since this is the only place that you might see this message.

It’s possible to get false positives here. A change to the library does not necessarily mean that the collation changed. However, it’s always a good idea to be cautious and rebuild objects that depend on changed collations. The PostgreSQL documentation provides instructions on how to do so. The PostgreSQL Wiki also has a good entry about this problem.

In the next installment, we’ll cover a subject near and dear to every database person’s heart: speed.

14 November 2024

17:57

In this installment of our series about character encodings, collations, and locales in PostgreSQL, we’re talking about locale providers.

A reminder about locales

A “locale” is a bundled combination of data structures and code that provides support services for handling different localization services. For our purposes, the two most important things that a locale provides are:

- A character encoding.

- A collation.

And as a quick reminder, a character encoding is a mapping between a sequence of one or more bytes and a “glyph” (what we generally think of as a character, like A or * or Å or 🤷). A collation is a function that places strings of characters into a total ordering.

UTF-8

Everything here assumes you are using UTF-8 encoding in your database. If you are not, please review why you should.

Getting locale

A locale provider is a library (in the computing sense, a hunk of code and data) whose API provides a set of locales and lets you use the functions in them. There are three interesting locale providers in the PostgreSQL world:

- libc — This is the implementation of the C standard library on every POSIX-compatible system, and many others. You’ll most often see glibc, which is the GNU project’s implementation of libc.

- ICU — The International Components for Unicode. This is a (very) extensive library for working with Unicode text. Among other features, it has a comprehensive locale system.

- builtin — As of version 17, PostgreSQL has a builtin collation provider, based on locales built into PostgreSQL itself.

libc

When nearly everyone thinks of locales, they think of libc. Really, they mostly think of the ubiquitous en_US.UTF-8 locale. If you do not specify a locale when creating a new PostgreSQL instance using initdb, it uses the system locale, which is very often en_US.UTF-8. (Or C locale, which is almost never the right choice.)

The structure of a glibc locale name is (using en_US.UTF-8 as an example):

- en — The base language of the locale, usually the ISO 639 code.

- US — Is the “region.” The “region” is used if there are subtypes of locales within the language. US means US (“American”) English.

- UTF-8 — Is the character encoding.

- There’s also a (rarely seen in practice) optional “modifier” that can be appended, starting with an @. For example, @euro means to use the Euro currency symbol. (Not all modifiers apply to all locales.)
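The structure above is regular enough to pull apart with a regular expression. This Python sketch is a simplification that handles the common shape of glibc locale names (it is not glibc’s own parser), and parse_locale_name is a name I made up.

```python
import re

# language, then optional _REGION, optional .ENCODING, optional @modifier
LOCALE_NAME = re.compile(
    r"^(?P<language>[A-Za-z]+)"
    r"(?:_(?P<region>[A-Za-z0-9]+))?"
    r"(?:\.(?P<encoding>[A-Za-z0-9-]+))?"
    r"(?:@(?P<modifier>[A-Za-z0-9]+))?$"
)


def parse_locale_name(name: str) -> dict:
    """Split a glibc-style locale name into its parts (None where absent)."""
    match = LOCALE_NAME.match(name)
    if match is None:
        raise ValueError(f"not a recognizable locale name: {name!r}")
    return match.groupdict()


print(parse_locale_name("en_US.UTF-8"))
print(parse_locale_name("de_AT.UTF-8@euro"))
```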

The exact set of locales available on a particular system depends on the particular flavor of *NIX system, the distribution, and what packages have been installed. The command locale -a will list what’s currently installed, and locale will show the various settings of the current locale.

Different platforms can have different collations for the same locale when using libc, and the collation rules for a particular locale can change between libc releases. (We’ll talk about what happens here in a future installment.)

ICU

ICU is an extremely powerful system for dealing with text in a wide variety of languages, and has a head-spinning number of options and configurations. The set of possible ICU collations is enormous, and this just scratches the surface.

In PostgreSQL, ICU locales can be used by creating one in the database using the CREATE COLLATION command. An example is:

CREATE COLLATION english (provider = icu, locale = 'en-US');

The basic structure of ICU collation names is similar to that of libc collations: a language code, followed by an optional region (in ICU vernacular, these two components are a language tag). In addition to the language tag, locale names can take key/value pairs of options, introduced by a -u- extension in the locale name. An example from the PostgreSQL documentation is:

CREATE COLLATION mycollation5 (provider = icu, deterministic = false, locale = 'en-US-u-kn-ks-level2');

Taking the components of the name piece by piece:

- en — English.

- US — United States English.

- u — Introduces Unicode Consortium-specific options.

- kn — Sort numbers as a single numeric value rather than as a sequence of digit characters. ‘123’ will sort after ‘45’, for example. There is no “value” part, as kn is a true/false flag.

- ks-level2 — Sets the “sensitivity” of the collation to level2. You can think of the “level” as how much the collation “cares” about differences between characters. level2 means that the collation doesn’t “care” about upper-case vs lower-case characters, and will thus do case-insensitive sortation.

ICU also defines a language code of und (meaning “undefined”). To distinguish libc-style collations from ICU-style collations when only a language tag is given, -x-icu is added to the end of the name.

ICU is a library like libc, and like libc, the collation algorithms can change between releases (although, in practice, this happens less frequently than it does with libc).

builtin

As of PostgreSQL 17, PostgreSQL has a built-in locale provider, using the idiosyncratic name of builtin. The builtin provider, right now, defines exactly two locales:

- C — This is identical to the C locale provided by libc, but is implemented within the PostgreSQL core.

- C.UTF-8 — This sorts based on the numeric Unicode codepoint rather than just treating the text as a sequence of bytes (which ignores encodings). This produces results that, while often not correct for any language except US English (pretty much), are deterministic, and performs very well. Needless to say, this is only available for databases in UTF-8 encoding.

So, why is there a builtin provider at all? Don’t libc and ICU cover the space completely? Well, yes, with one very important exception, which we’ll talk about in our next installment.

27 October 2024

03:18

This is the second installment in our discussion of locales, character encodings, and collations in PostgreSQL. In this installment, we’ll talk about character encodings as they relate to PostgreSQL.

A quick reminder!

A character encoding is a mapping between code points (that is, numbers) and glyphs (what us programmers usually call characters). There are lots, and lots, and lots of different character encodings, most of them some superset of good old 7-bit ASCII.

PostgreSQL and Encodings

From the point of view of the computer, a character string is just a sequence of bytes (maybe terminated by a zero byte, maybe with a length). If the only thing PostgreSQL had to do with character strings was store them and return them to the client, it could just ignore that character encodings even exist.

However, databases don’t just store character strings: they also compare them, build indexes on them, change them to upper case, do regex searches on them, and other things that mean they need to know what the characters are and how to manipulate them. So, PostgreSQL needs to know what character encoding applies to the text it is storing.

You specify the character encoding for a database when creating the database. (Each database in a PostgreSQL instance can have a different encoding.) That encoding applies to all text stored in the database, and can’t be changed once the database is created: your only option to change encodings is to use pg_dump (or a similar copy-based system, like logical replication) and transfer the data to a new database with the new encoding. This makes the choice of what encoding to pick up front very important.

This is stupid and I don’t want to think about it.

If you search the web, you’ll see a lot of advice that you should just “ignore locales” in PostgreSQL and just use “C” locale. This advice usually dates from around when PostgreSQL first introduced proper locale support, and there was a certain amount of culture shock in having to deal with these issues.

This is terrible advice. If you are processing human-readable text, you need to think about these topics. Buckle up.

The good news is that there is a correct answer to the question, “What encoding should I use?”

Use UTF-8

The character encoding decision is an easy one: Use UTF-8. Although one can argue endlessly over whether or not Unicode is the “perfect” way of encoding characters, we almost certainly will not get a better one in our lifetime, and it has become the de facto (and in many cases, such as JSON, the de jure) standard for text on modern systems.

The only exception is if you are building a highly specialized database that will only ever accept text in a particular encoding. The chance of this is extremely small, and even then, there is a strong argument for converting the text to UTF-8 instead.

But what about C encoding?

There is a downside to using UTF-8: It’s slower than basic “C” encoding. (“C” encoding, as a reminder, just takes the binary strings as it finds them and compares them that way, without regard to what natural language code points they represent.) The difference is significant: on a quick test of sorting 1,000,000 strings, each 64 characters and ASCII-only, UTF-8 encoding with the en_UTF8 collation was almost 18 times slower than C encoding and collation.

There are, however, some significant problems with C encoding:

The first time someone stores any character that isn’t 7-bit ASCII, the probability for mayhem goes up considerably, and it’s very hard to fix this once it happens.

You will get sortation in a way that doesn’t match any natural language (except maybe US English), and can be very surprising to a human reading the output.

Unless sorting a large number of character strings on a regular basis is a bottleneck, or you know that you will never have strings that correspond to natural language, the performance improvement is not going to be worth the inevitable issues. Just use UTF-8 encoding.

(There are some reasonable alternatives we will discuss later, with their own set of trade-offs.)

Coming up next…

Having decided that we are going to use UTF-8 as the character encoding, which collation should we use? PostgreSQL has an embarrassingly large number of options here, and version 17 introduced some new ones!

25 October 2024

03:50

If you are not familiar with the quote.

This is part one of a series on PostgreSQL and collations, and how to use them without tears. This is an introduction to the general concepts of glyphs, character encodings, collations, and locales.

Glyphs

There is (as always in things involving real human behavior) some controversy over what is a glyph and what isn’t, but as a first approximation, we can use this definition:

These are all glyphs:

A z 4 ü

Symbols are also glyphs:

^ @ ! ‰

Some things are both:

∏

Capital π is both a mathematical symbol and a letter of the Greek alphabet. Of course, most Greek letters are also mathematical symbols.

Glyphs also include characters from other languages that are not alphabetic:

這些都是繁體中文的字形

ᎣᏍᏓ ᏑᎾᎴᎢ

And glyphs include symbols that aren’t traditional language components at all:

🏈 🐓 🔠 🛑

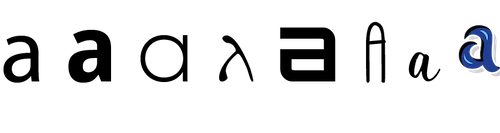

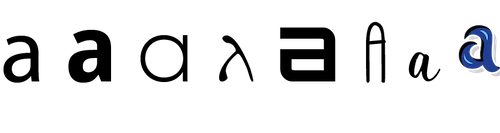

Strictly speaking, there is a distinction between a “glyph” and a “grapheme.” The “glyph” is the mark itself, which can be different depending on typeface, weight, etc. The “grapheme” is the underlying functional unit that the glyph represents. In this definition, these are all the same “grapheme” (lower-case Latin a) but different glyphs:

However, everything involving characters in computing calls the fundamental units “glyphs” rather than “graphemes,” so that’s the term you’ll see here.

A glyph corresponds to what most programmers intuitively think of as a character, and the terms are more or less interchangeable, with some exceptions that we’ll talk about in future articles.

Character Encodings

We need computers to be able to understand glyphs. That means we need to turn glyphs into numbers. (A number that represents a glyph is that glyph’s code point.)

For that, we have a character encoding. A character encoding is a bidirectional mapping between a set of glyphs and a set of numbers. For programmers, probably the most familiar character encoding is ASCII, which maps a (very) limited set of 95 glyphs to 7-bit numbers. (There are encodings even older than ASCII.) Some greatest ASCII hits include:

A ↔ 65 (0x41)

a ↔ 97 (0x61)

* ↔ 42 (0x2A)

ASCII has been around for a long time (it achieved its modern form in 1967). It has some nice features: if an ASCII code represents an upper-case letter, it can be turned into the matching lower-case letter with code + 0x20, and back to upper-case with code - 0x20. If an ASCII code is a numeric glyph, it can be turned into the actual numeric equivalent with code - 0x30.
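Those tricks are simple enough to write out. A small Python sketch follows; the function names are made up, and the range checks are there because the arithmetic only makes sense for ASCII letters and digits.

```python
def ascii_lower(ch: str) -> str:
    """Upper-case ASCII letter to lower-case: code + 0x20."""
    code = ord(ch)
    return chr(code + 0x20) if ord("A") <= code <= ord("Z") else ch


def ascii_upper(ch: str) -> str:
    """Lower-case ASCII letter to upper-case: code - 0x20."""
    code = ord(ch)
    return chr(code - 0x20) if ord("a") <= code <= ord("z") else ch


def ascii_digit_value(ch: str) -> int:
    """Digit glyph to its numeric value: code - 0x30."""
    if not ("0" <= ch <= "9"):
        raise ValueError(f"not an ASCII digit: {ch!r}")
    return ord(ch) - 0x30


print(ascii_lower("A"), ascii_upper("a"), ascii_digit_value("7"))
```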

(You’ll sometimes hear the phrase “7-bit ASCII.” This is, strictly speaking, redundant. ASCII doesn’t define any code points above 127. Everything that uses the range 128-255 is an extension to ASCII. “7-bit ASCII” is useful, though, when you want to specify “ASCII with no extensions.”)

ASCII has huge limitations: It was designed to represent the characters that appeared on a typical English-language computer terminal in 1967, which means it lacks glyphs for the vast majority of languages in the world (even English! ï, for example, is very commonly used in English, as is é).

It became instantly obvious that ASCII wasn’t going to cut it as a worldwide character encoding. Different languages started developing their own character encodings, some based on ASCII codes greater than 127, some on newly-invented character encodings that took more than a single 8-bit byte to represent. Chaos reigned.

Unicode

In an attempt to bring some kind of order to character encodings, an effort began in the late 1980s to create a universal encoding that would include all glyphs from all languages. This was just as ambitious as it sounds! In 1991, the Unicode Consortium was formed, and the first version of Unicode was published in October 1991.

Unicode code points are usually treated as 32-bit numbers (the defined range runs from 0 to 0x10FFFF, so only 21 bits are actually needed), organized into “code planes.” The “Basic Multilingual Plane,” which contains most glyphs for most living languages, has the top 16 bits equal to zero, but Unicode code points can be, and often are in real life, greater than 65,535.
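To make the plane idea concrete, here is a small Python sketch. `code_plane` is a name invented for this example; it just shifts away the low 16 bits of the code point.

```python
def code_plane(glyph: str) -> int:
    """Return the plane number: whatever is left above the low 16 bits."""
    return ord(glyph) >> 16


football = "\U0001F3C8"  # the 🏈 emoji

print(code_plane("A"))       # plane 0, the Basic Multilingual Plane
print(code_plane("\u9019"))  # 這 is also in plane 0
print(code_plane(football))  # emoji live outside the Basic Multilingual Plane
print(ord(football) > 65_535)
```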

So, is Unicode a “character encoding”? Well, yes and no. It maps glyphs to code points and back again, so it qualifies as a character encoding in that sense. But Unicode deliberately does not specify how the particular code points are to be stored inside the computer.

To find out how the code points are actually stored inside the computer, we need to talk about Unicode Transformation Formats.

UTF-8, UTF-16, etc.

A Unicode Transformation Format (UTF) is a way of encoding a 32-bit Unicode code point. (Yes, we’re encoding an encoding. Computers are great!)

The simplest UTF is UTF-32: we just take four bytes for each Unicode code point, and that’s that. It’s simple and doesn’t require anything special to decode (well, almost), but it means we are taking four bytes for every glyph. Given that a large percentage of the time, computers are dealing with ASCII characters, we’ve just made our character strings four times larger. No one wants that.
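The size penalty is easy to see in Python. This sketch uses the `utf-32-le` codec, which fixes the byte order explicitly so that no byte-order mark is added to the output.

```python
text = "Hello, world"

ascii_bytes = text.encode("ascii")
utf32_bytes = text.encode("utf-32-le")  # explicit little-endian, no byte-order mark

print(len(ascii_bytes))  # one byte per character
print(len(utf32_bytes))  # four bytes per character
```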

So, UTF-8 and UTF-16 were invented, and they are among the cleverest inventions ever. The rules for both are:

- Consider the Unicode code point as a 32 bit number.

- If the upper 25 bits (for UTF-8) or upper 16 bits (for UTF-16) are zero, the encoding is just the lower 7 bits (for UTF-8) or lower 16 bits (for UTF-16), stored as one 8-bit byte (UTF-8) or two 8-bit bytes (UTF-16).

- If any of the upper 25/16 bits are not zero, the code point is broken into a sequence of multiple 1-byte (UTF-8) or 2-byte (UTF-16) units based on some clever rules.

One of the very nice features of Unicode is that any code point where the upper 25 bits are zero is exactly the same as the ASCII code point for the same glyph, so text encoded in ASCII is also encoded in UTF-8 with no other processing.
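For the curious, the “clever rules” for UTF-8 can be sketched in a few lines of Python. This is an illustration only (`utf8_encode` is a made-up name, and real code should just call `str.encode("utf-8")`): the number of bytes depends on how large the code point is, the first byte carries a prefix saying how many bytes follow, and every following byte starts with the bits `10`.

```python
def utf8_encode(code_point: int) -> bytes:
    """Encode one Unicode code point as UTF-8, by hand."""
    if not 0 <= code_point <= 0x10FFFF or 0xD800 <= code_point <= 0xDFFF:
        raise ValueError("not an encodable Unicode code point")
    if code_point < 0x80:
        # 7 bits or fewer: the byte is the ASCII code itself.
        return bytes([code_point])
    if code_point < 0x800:
        # Up to 11 bits: 110xxxxx 10xxxxxx
        return bytes([0xC0 | (code_point >> 6),
                      0x80 | (code_point & 0x3F)])
    if code_point < 0x10000:
        # Up to 16 bits: 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0xE0 | (code_point >> 12),
                      0x80 | ((code_point >> 6) & 0x3F),
                      0x80 | (code_point & 0x3F)])
    # Up to 21 bits: 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
    return bytes([0xF0 | (code_point >> 18),
                  0x80 | ((code_point >> 12) & 0x3F),
                  0x80 | ((code_point >> 6) & 0x3F),
                  0x80 | (code_point & 0x3F)])


# The hand-rolled encoder agrees with Python's built-in one.
for glyph in ["A", "\u00e9", "\u9019", "\U0001F3C8"]:  # A, é, 這, 🏈
    assert utf8_encode(ord(glyph)) == glyph.encode("utf-8")

# ASCII text is already valid UTF-8, byte for byte.
assert "plain ASCII".encode("ascii") == "plain ASCII".encode("utf-8")
```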

UTF-8 is the overwhelming favorite for encoding Unicode.

One downside of UTF-8 is that a “character” in the traditional sense is no longer fixed length, so you can’t just count bytes to tell how many characters are in a string. Programming languages have struggled with this for years, although the situation seems to be finally calming down.
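A short Python sketch of the mismatch. The helper `count_code_points` is invented for this example; it relies on the fact that every UTF-8 continuation byte has the bit pattern `10xxxxxx`, so counting the bytes that do not look like that counts the code points.

```python
def count_code_points(utf8_bytes: bytes) -> int:
    """Count code points by skipping continuation bytes (10xxxxxx)."""
    return sum(1 for byte in utf8_bytes if (byte & 0xC0) != 0x80)


text = "na\u00efve caf\u00e9"  # "naïve café", with precomposed ï and é
encoded = text.encode("utf-8")

print(len(text))                   # characters, as Python counts them
print(len(encoded))                # bytes: ï and é take two bytes each
print(count_code_points(encoded))  # back to the character count
```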

In order to process UTF-8 properly, the code must know that it’s getting UTF-8. If you have ever seen ugly things like ‚Äù on a web page where a “ should be, you’ve seen what happens when UTF-8 isn’t interpreted properly.
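You can reproduce that kind of garbage in Python by encoding a curly quote as UTF-8 and then decoding the bytes with the wrong encoding. This sketch assumes the wrong guess is Mac OS Roman (Python's `mac_roman` codec), which is one legacy encoding that turns those three bytes into three unrelated glyphs; other legacy encodings produce different junk.

```python
right_quote = "\u201d"  # the ” character

utf8_bytes = right_quote.encode("utf-8")  # three bytes: E2 80 9D

# Decode with the wrong encoding: each byte becomes its own glyph.
garbled = utf8_bytes.decode("mac_roman")
print(garbled)

# As long as no bytes were lost, the damage is reversible.
repaired = garbled.encode("mac_roman").decode("utf-8")
print(repaired == right_quote)
```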

Collations

Computers have to compare character strings. From this really simple requirement, no end of pain has flowed.

A collation is just a function that takes two strings (that is, ordered sequences of glyphs represented as code points), and says whether the first is less than, equal to, or greater than the second:

f(string1, string2) → (<, =, >)
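In code, a collation is just a comparison function. Here is a sketch in Python with two toy collations, neither of which is a real language's rules: one compares raw code points, and one ignores case. Both function names are made up for this example. The same three words sort differently depending on which one you pick.

```python
from functools import cmp_to_key


def code_point_collation(a: str, b: str) -> int:
    """Return -1, 0, or 1 by comparing code points (Python's default for str)."""
    return (a > b) - (a < b)


def case_insensitive_collation(a: str, b: str) -> int:
    """A toy collation that ignores case differences."""
    key_a, key_b = a.casefold(), b.casefold()
    return (key_a > key_b) - (key_a < key_b)


words = ["banana", "Cherry", "apple"]

by_code_point = sorted(words, key=cmp_to_key(code_point_collation))
by_folded_case = sorted(words, key=cmp_to_key(case_insensitive_collation))

print(by_code_point)   # upper-case letters have smaller code points, so "Cherry" is first
print(by_folded_case)  # alphabetical, regardless of case
```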

The problem, as always, is human beings. Different languages have different rules for what order strings should appear in, even if exactly the same glyphs are used. Although there have been some heroic attempts to define collation rules that span languages, languages and the way they are written tend to be very emotional issues tied up with feelings of culture and national pride (“a language is a dialect with an army and a navy”).

There are a lot of collations. Collations by their nature are associated with a particular character encoding, but one character encoding can have many different collations. For example, just on my laptop, UTF-8 has 53 different collations.

Locale

On POSIX-compatible systems (which include Linux and *BSD), collations are one part of a bundle called a locale. A locale includes several different utility functions; they typically are:

- Number format setting

- Character encoding

- Some related utility functions (such as how to convert between cases in the encoding).

- Date-time format setting

- The string collation to use

- Currency format setting

- Paper size setting

Locales on POSIX systems have names that look like:

fr_BE.UTF-8

fr defines the language (French), BE defines the “territory” (Belgium, so we are talking about Belgian French), and UTF-8 is the character encoding. From this, we can determine that the character strings are encoded as UTF-8, and the collation rules are the rules for Belgian French.

If there’s only one character encoding available for a particular combination of language and territory, you’ll sometimes see the locale written without the encoding, like fr_BE.
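Pulling a locale name apart is simple string splitting. This Python sketch uses hypothetical names (`LocaleName`, `parse_locale_name`) and only handles the language, territory, and encoding parts described above; it ignores anything else a real system might tack on.

```python
from typing import NamedTuple, Optional


class LocaleName(NamedTuple):
    language: str
    territory: Optional[str]
    encoding: Optional[str]


def parse_locale_name(name: str) -> LocaleName:
    """Split a name like 'fr_BE.UTF-8' into its three parts."""
    base, _, encoding = name.partition(".")
    language, _, territory = base.partition("_")
    # Missing parts come back as empty strings; report them as None.
    return LocaleName(language, territory or None, encoding or None)


print(parse_locale_name("fr_BE.UTF-8"))
print(parse_locale_name("fr_BE"))  # encoding omitted
```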

There is one locale (with two different names) that is a big exception to the rules, and an important one:

C or POSIX

The C locale (also called POSIX) uses rules defined by the C/C++ language standard. This means that the system doesn’t have an opinion about what encoding the strings are in, and it’s up to the programmer to keep track of them. (This is really true in any locale, but in the C locale, the system doesn’t even provide a hint as to what encoding to use or expect.) The collation function just compares strings byte-wise; this works great on ASCII, and is meaningless (even for equality!) on UTF-8 or UTF-16. For functions, like case conversion, that need to know a character encoding, the C locale uses the rules for ASCII, which are completely and utterly wrong for most other encodings.
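Here is a sketch in Python of what byte-wise comparison does to UTF-8 text. It doesn't call the C library; it just sorts and compares the encoded bytes, which is the behavior described above. The sample words are arbitrary.

```python
import unicodedata

words = ["zebra", "Apple", "\u00e9clair", "apple"]  # "éclair" with a precomposed é

# Sort by raw UTF-8 bytes, the way a byte-wise collation would.
bytewise = sorted(words, key=lambda word: word.encode("utf-8"))
print(bytewise)  # upper-case first, and "éclair" lands after "zebra"

# Two ways to write é: one code point, or "e" plus a combining accent.
composed = "\u00e9"
decomposed = "e\u0301"

# They look identical on screen, but the bytes differ...
print(composed.encode("utf-8") == decomposed.encode("utf-8"))

# ...so you need Unicode-aware normalization before equality means anything.
print(unicodedata.normalize("NFC", decomposed) == composed)
```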

PostgreSQL also provides a C.UTF-8 locale, which is a special case on a special case. We’ll talk about that in a future installment.

Locale Provider

Locales are really just bundles of code and data, so they need to come from somewhere so the system can use them. The code libraries that contain locales are locale providers. There are two important ones (plus one other that’s relevant to PostgreSQL only).

(g)libc

libc is the library that implements the standard C library (and a lot of other stuff) on POSIX systems. It’s where 99% of programs on POSIX systems get locale information from. Different distributions of Linux and *BSD systems have different sets of locales that are provided as part of the base system, and others that can be installed in optional packages. You’ll usually see libc as glibc, which is the Free Software Foundation’s GNU Project’s version of libc.

ICU

The International Components for Unicode is a set of libraries from the Unicode Consortium to provide utilities for handling Unicode. ICU includes a large number of functions, including a large set of locales. One of the most important tools provided by ICU is an implementation of the Unicode Collation Algorithm, which provides a basic collation algorithm usable across multiple languages.

(Full disclosure: The work that ultimately became the ICU was started at Taligent, where I was the networking architect. It’s without a doubt the most useful thing Taligent ever produced.)

The PostgreSQL Built-In Provider

In version 17, PostgreSQL introduced a built-in locale provider. We’ll talk about that in a future installment.

What’s Next?

In the next installment, we’ll talk about how PostgreSQL uses all of this, and why it has resulted in some really terrible situations.