
“Gentlemen, this is a 🏈”: Glyphs, Encodings, Collations, and Locales

25 October 2024

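If you are not familiar with the quote: it is attributed to the American football coach Vince Lombardi, who is said to have opened a training camp by holding up a football and saying exactly that, going back to the most basic fundamentals.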

This is part one of a series on PostgreSQL and collations, and how to use them without tears. This is an introduction to the general concepts of glyphs, character encodings, collations, and locales.

Glyphs

There is (as always in things involving real human behavior) some controversy over what is a glyph and what isn’t, but as a first approximation, we can define glyphs by example.

These are all glyphs:

A z 4 ü

Symbols are also glyphs:

^ @ ! ‰

Some things are both:

∏

Capital pi is both a mathematical symbol and a letter of the Greek alphabet. Of course, most Greek letters are also mathematical symbols.

Glyphs also include characters from writing systems that are not alphabetic, such as Chinese characters and the Cherokee syllabary:

這些都是繁體中文的字形

ᎣᏍᏓ ᏑᎾᎴᎢ

And glyphs include symbols that aren’t traditional language components at all:

🏈 🐓 🔠 🛑

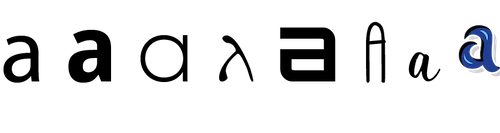

Strictly speaking, there is a distinction between a “glyph” and a “grapheme.” The “glyph” is the mark itself, which can be different depending on typeface, weight, etc. The “grapheme” is the underlying functional unit that the glyph represents. In this definition, a lower-case Latin a drawn in a serif typeface, in a sans-serif typeface, in bold, or in italic is the same “grapheme” every time, but a different glyph every time.

However, most writing about characters in computing calls the fundamental units “glyphs” rather than “graphemes,” so that’s the term you’ll see here.

A glyph corresponds to what most programmers intuitively think of as a character, and the terms are more or less interchangeable, with some exceptions that we’ll talk about in future articles.

Character Encodings

We need computers to be able to understand glyphs. That means we need to turn glyphs into numbers. (A number that represents a glyph is that glyph’s code point.)

For that, we have a character encoding. A character encoding is a bidirectional mapping between a set of glyphs and a set of numbers. For programmers, probably the most familiar character encoding is ASCII, which maps a (very) limited set of 95 printable glyphs to 7-bit numbers (the remaining 33 of the 128 codes are control codes). (There are encodings even older than ASCII.) Some of ASCII’s greatest hits include:

A ↔ 65 (0x41)

a ↔ 97 (0x61)

* ↔ 42 (0x2A)

ASCII has been around for a long time (it achieved its modern form in 1967). It has some nice features: if an ASCII code represents an upper-case letter, it can be turned into the matching lower-case letter with code + 0x20, and back to upper-case with code - 0x20. If an ASCII code is a numeric glyph, it can be turned into the actual numeric equivalent with code - 0x30.
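The arithmetic above is easy to check in Python, where `ord()` returns a character’s code point and `chr()` turns a code point back into a character. This is a minimal sketch; the three helper names are hypothetical, and they deliberately handle only ASCII input.

```python
def ascii_to_lower(ch: str) -> str:
    """Lower-case an ASCII capital letter by adding 0x20; leave anything else alone."""
    code = ord(ch)
    if 0x41 <= code <= 0x5A:  # 'A'..'Z'
        return chr(code + 0x20)
    return ch


def ascii_to_upper(ch: str) -> str:
    """Upper-case an ASCII small letter by subtracting 0x20; leave anything else alone."""
    code = ord(ch)
    if 0x61 <= code <= 0x7A:  # 'a'..'z'
        return chr(code - 0x20)
    return ch


def ascii_digit_value(ch: str) -> int:
    """Turn an ASCII digit glyph into its numeric value by subtracting 0x30."""
    code = ord(ch)
    if not 0x30 <= code <= 0x39:  # '0'..'9'
        raise ValueError(f"{ch!r} is not an ASCII digit")
    return code - 0x30


print(ord("A"), ord("a"), ord("*"))  # 65 97 42
print(ascii_to_lower("A"), ascii_to_upper("a"), ascii_digit_value("7"))  # a A 7
```

Note that `ascii_to_lower("Ü")` returns "Ü" unchanged: the 0x20 trick only works inside ASCII’s A to Z range.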

(You’ll sometimes hear the phrase “7-bit ASCII.” This is, strictly speaking, redundant. ASCII doesn’t define any code points above 127. Everything that uses the range 128-255 is an extension to ASCII. “7-bit ASCII” is useful, though, when you want to specify “ASCII with no extensions.”)
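Python’s codecs make the “nothing above 127” rule concrete. The strict `ascii` codec rejects any byte at 0x80 or above, while two common 8-bit extensions, ISO 8859-1 (Python’s `latin-1` codec) and Windows-1252 (`cp1252`), agree that byte 0xE9 is é but disagree about byte 0x80. A small sketch:

```python
# Byte values above 127, decoded several different ways.
data_e9 = bytes([0xE9])
data_80 = bytes([0x80])

try:
    data_e9.decode("ascii")
except UnicodeDecodeError as exc:
    print("ascii:", exc.reason)          # ascii: ordinal not in range(128)

print(data_e9.decode("latin-1"))         # é
print(data_e9.decode("cp1252"))          # é
print(repr(data_80.decode("latin-1")))   # '\x80' (a C1 control character)
print(data_80.decode("cp1252"))          # €
```

Same byte, different glyph: that disagreement between extensions is exactly why “ASCII with no extensions” is worth saying explicitly.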

ASCII has huge limitations: It was designed to represent the characters that appeared on a typical English-language computer terminal in 1967, which means it lacks glyphs for the vast majority of languages in the world (even English! Words like naïve and café use ï and é).

It became instantly obvious that ASCII wasn’t going to cut it as a worldwide character encoding. Different languages started developing their own character encodings, some using the byte values above 127 that ASCII leaves undefined, and some newly invented encodings that took more than a single 8-bit byte to represent a character. Chaos reigned.

Unicode

In an attempt to bring some kind of order to character encodings, an effort began in the late 1980s to create a universal encoding that would include all glyphs from all languages. This was just as ambitious as it sounds! The Unicode Consortium was formed in 1991, and the first version of Unicode was published in October of that year.

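Unicode code points are numbers from 0 to 0x10FFFF (they need only 21 bits, but are usually handled as 32-bit integers), organized into 17 “planes” of 65,536 code points each. The “Basic Multilingual Plane” (plane 0), which contains most glyphs for most living languages, is the set of code points whose top 16 bits are zero, but Unicode code points can be, and often are in real life, greater than 65,535. Many emoji, such as 🏈, live in plane 1.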
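To see which plane a glyph lands in, take its code point and shift off the low 16 bits. A minimal Python sketch; the `plane` helper is hypothetical, written just for this illustration, and expects a single code point:

```python
def plane(ch: str) -> int:
    """Return the Unicode plane (0 to 16) of a single-code-point string."""
    code_point = ord(ch)
    return code_point >> 16  # each plane holds 0x10000 (65,536) code points


for glyph in ["A", "ü", "這", "🏈"]:
    print(f"{glyph!r}: U+{ord(glyph):04X}, plane {plane(glyph)}")

print(hex(0x10FFFF), plane(chr(0x10FFFF)))  # highest code point: 0x10ffff 16
```

The first three glyphs come out in plane 0; the football is U+1F3C8, in plane 1.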

So, is Unicode a “character encoding”? Well, yes and no. It maps glyphs to code points and back again, so it qualifies as a character encoding in that sense. But a code point is just an abstract number: assigning one says nothing about how it is to be stored inside the computer, and Unicode deliberately keeps those two questions separate.

To find out how the code points are actually stored inside the computer, we need to talk about Unicode Transformation Formats.

UTF-8, UTF-16, etc.

A Unicode Transformation Format (UTF) is a way of encoding a Unicode code point as a sequence of fixed-size code units (8-bit bytes, 16-bit units, or 32-bit units). (Yes, we’re encoding an encoding. Computers are great!)

The simplest UTF is UTF-32: we just take four bytes for each Unicode code point, and that’s that. It’s simple and doesn’t require anything special to decode (well, almost), but it means we are taking four bytes for every glyph. Given that a large percentage of the time, computers are dealing with ASCII characters, we’ve just made our character strings four times larger. No one wants that.
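The four-to-one cost is easy to measure in Python. This sketch uses the `utf-32-le` codec, which fixes the byte order as little-endian and so writes no byte-order mark; the plain `utf-32` codec prepends a 4-byte mark so that a reader can tell big-endian data from little-endian data, one of the small wrinkles in decoding UTF-32.

```python
text = "Hello, world"

utf32 = text.encode("utf-32-le")  # fixed byte order, so no byte-order mark
utf8 = text.encode("utf-8")

print(len(text), len(utf32), len(utf8))  # 12 48 12

# The plain "utf-32" codec prepends a 4-byte byte-order mark (BOM).
print(len(text.encode("utf-32")))        # 52
```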

So, UTF-8 and UTF-16 were invented, and they are among the cleverest inventions in computing. The rules for both are:

- Consider the Unicode code point as a 32-bit number.

- If the upper 25 bits (for UTF-8) or 16 bits (for UTF-16) are zero, the encoding is just the lower 7 bits (for UTF-8) or 16 bits (for UTF-16), stored as a single 8-bit byte (UTF-8) or a single 16-bit unit (UTF-16).

- If any of those upper bits are not zero, the code point is split across a sequence of two to four 8-bit bytes (UTF-8) or a pair of 16-bit units called a surrogate pair (UTF-16), following some clever rules.
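The “clever rules” fit in a few lines. Below is a hand-rolled encoder for a single code point in each format, checked against Python’s built-in codecs. It illustrates the bit layout only: it does no validation (for example, it does not reject the surrogate range U+D800 through U+DFFF), and the function names are hypothetical.

```python
def utf8_encode(cp: int) -> bytes:
    """Encode one code point as UTF-8 (no validation)."""
    if cp < 0x80:        # 7 bits: one byte, identical to ASCII
        return bytes([cp])
    if cp < 0x800:       # 11 bits: 110xxxxx 10xxxxxx
        return bytes([0xC0 | (cp >> 6), 0x80 | (cp & 0x3F)])
    if cp < 0x10000:     # 16 bits: 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0xE0 | (cp >> 12),
                      0x80 | ((cp >> 6) & 0x3F),
                      0x80 | (cp & 0x3F)])
    # 21 bits: 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
    return bytes([0xF0 | (cp >> 18),
                  0x80 | ((cp >> 12) & 0x3F),
                  0x80 | ((cp >> 6) & 0x3F),
                  0x80 | (cp & 0x3F)])


def utf16_encode(cp: int) -> list[int]:
    """Encode one code point as a list of 16-bit UTF-16 code units (no validation)."""
    if cp < 0x10000:     # Basic Multilingual Plane: a single unit
        return [cp]
    v = cp - 0x10000     # 20 bits remain
    return [0xD800 | (v >> 10),      # high (lead) surrogate
            0xDC00 | (v & 0x3FF)]    # low (trail) surrogate


for ch in ["A", "ü", "這", "🏈"]:
    cp = ord(ch)
    assert utf8_encode(cp) == ch.encode("utf-8")
    units = utf16_encode(cp)
    assert b"".join(u.to_bytes(2, "big") for u in units) == ch.encode("utf-16-be")
    print(f"U+{cp:04X}: UTF-8 {utf8_encode(cp).hex(' ')}, "
          f"UTF-16 {' '.join(f'{u:04X}' for u in units)}")
```

The loop shows one, two, three, and four UTF-8 bytes respectively, while only the football needs a UTF-16 surrogate pair.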

One of the very nice features of Unicode is that every code point whose upper 25 bits are zero (0 through 127) is exactly the same as the ASCII code point for the same glyph, and UTF-8 stores it as that same single byte, so text encoded in ASCII is also valid UTF-8 with no other processing.

UTF-8 is the overwhelming favorite for encoding Unicode.

One downside of UTF-8 is that a “character” in the traditional sense is no longer fixed length, so you can’t just count bytes to tell how many characters are in a string. Programming languages have struggled with this for years, although the situation seems to be finally calming down.
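In Python 3, `len()` on a `str` counts code points, while `len()` on the encoded `bytes` counts bytes, so the two numbers diverge as soon as non-ASCII text appears. This sketch also shows that code points are not quite “characters” either: the same visible ü can be stored as one code point or as two.

```python
import unicodedata

word = "Müller 🏈"
print(len(word))                   # 8 code points
print(len(word.encode("utf-8")))   # 12 bytes: ü takes 2, 🏈 takes 4

# The same visible ü: one code point (U+00FC), or u + U+0308 COMBINING DIAERESIS.
composed = "\u00fc"
decomposed = unicodedata.normalize("NFD", composed)
print(len(composed), len(decomposed))  # 1 2
```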

In order to process UTF-8 properly, the code must know that it’s getting UTF-8. If you have ever seen ugly things like ‚Äù on a web page where a ” should be, you’ve seen what happens when UTF-8 isn’t interpreted properly.
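That kind of garbage comes from encoding text as UTF-8 and then decoding the bytes with some other encoding. This sketch reproduces it with Python’s `mac_roman` and `cp1252` codecs; which legacy encoding garbled any particular web page is a guess, but Mac OS Roman turns the three UTF-8 bytes of ” into exactly ‚Äù.

```python
right_quote = "\u201d"  # ” RIGHT DOUBLE QUOTATION MARK
left_quote = "\u201c"   # “ LEFT DOUBLE QUOTATION MARK

raw = right_quote.encode("utf-8")
print(raw.hex(" "))                                  # e2 80 9d
print(raw.decode("mac_roman"))                       # ‚Äù
print(left_quote.encode("utf-8").decode("cp1252"))   # â€œ

# Decoding with the right encoding recovers the original character.
print(raw.decode("utf-8") == right_quote)            # True
```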

Collations

Computers have to compare character strings. From this really simple requirement, no end of pain has flowed.

A collation is just a function that takes two strings (that is, ordered sequences of glyphs represented as code points) and says whether the first is less than, equal to, or greater than the second:

f(string1, string2) → one of {<, =, >}
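In code, that signature is just a three-way comparison function returning a negative number, zero, or a positive number. Python’s `functools.cmp_to_key` turns such a function into a sort key, so two toy collations (plain code-point order, and a case-insensitive order that breaks ties by code point) can be plugged into the same `sorted()` call. Both are illustrations, not real locale rules.

```python
from functools import cmp_to_key


def codepoint_collation(a: str, b: str) -> int:
    """Compare by Unicode code point: negative for <, 0 for =, positive for >."""
    return (a > b) - (a < b)


def case_insensitive_collation(a: str, b: str) -> int:
    """Compare ignoring case first, then by code point to break ties."""
    fa, fb = a.casefold(), b.casefold()
    folded = (fa > fb) - (fa < fb)
    return folded if folded != 0 else codepoint_collation(a, b)


words = ["banana", "Apple", "apple", "Banana"]
print(sorted(words, key=cmp_to_key(codepoint_collation)))
# ['Apple', 'Banana', 'apple', 'banana']
print(sorted(words, key=cmp_to_key(case_insensitive_collation)))
# ['Apple', 'apple', 'Banana', 'banana']
```

Same strings, same glyphs, two different orders: the only thing that changed is the collation function.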

The problem, as always, is human beings. Different languages have different rules for what order strings should appear in, even if exactly the same glyphs are used. Although there have been some heroic attempts to define collation rules that span languages, languages and the way they are written tend to be very emotional issues tied up with feelings of culture and national pride (“a language is a dialect with an army and a navy”).
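A tiny illustration of “same glyphs, different rules”: Swedish alphabetizes ö as a separate letter after z, while German dictionary order commonly files ö together with o. The sketch below hard-codes those two rules for lower-case letters only; the `SWEDISH` and `GERMAN_FOLD` tables are hypothetical simplifications, nowhere near a real Swedish or German collation.

```python
# Toy collation keys. Simplified and hypothetical: lower-case letters only,
# and no handling of case, accents beyond the umlauts, or anything else.
SWEDISH = "abcdefghijklmnopqrstuvwxyzåäö"        # å, ä, ö come after z
GERMAN_FOLD = {"ä": "a", "ö": "o", "ü": "u"}     # umlauts filed with the base letter


def swedish_key(word: str) -> list[int]:
    """Position of each letter in the (toy) Swedish alphabet."""
    return [SWEDISH.index(ch) for ch in word]


def german_key(word: str) -> tuple[str, str]:
    """Fold umlauts to their base letter; break ties by code point."""
    base = "".join(GERMAN_FOLD.get(ch, ch) for ch in word)
    return (base, word)


words = ["zebra", "öl", "ost"]
print(sorted(words, key=swedish_key))  # ['ost', 'zebra', 'öl']
print(sorted(words, key=german_key))   # ['öl', 'ost', 'zebra']
```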

There are a lot of collations. Collations by their nature are associated with a particular character encoding, but one character encoding can have many different collations. For example, just on my laptop, UTF-8 has 53 different collations.

Locale

On POSIX-compatible systems (which include Linux and *BSD), collations are one part of a bundle called a locale. A locale bundles several different settings and utility functions; they typically include:

- Number format setting

- Character encoding

- Some related utility functions (such as how to convert between cases in the encoding).

- Date-time format setting

- The string collation to use

- Currency format setting

- Paper size setting
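Each item in that list corresponds to a POSIX locale category: LC_NUMERIC, LC_CTYPE (encoding and case conversion), LC_TIME, LC_COLLATE, LC_MONETARY, and LC_PAPER (a glibc extension). The categories can be set independently. Python’s standard `locale` module exposes the portable ones; this sketch puts everything into the always-available C locale, reads back some number settings, and then tries to change only the collation. That fr_BE.UTF-8 is installed is an assumption, so the call is guarded.

```python
import locale

# Put every category into the C locale, which every POSIX system provides.
locale.setlocale(locale.LC_ALL, "C")

conv = locale.localeconv()  # LC_NUMERIC and LC_MONETARY settings
print(repr(conv["decimal_point"]), repr(conv["thousands_sep"]))  # '.' ''

# Categories are independent: change only the collation rules, if the
# fr_BE.UTF-8 locale happens to be installed on this machine.
try:
    locale.setlocale(locale.LC_COLLATE, "fr_BE.UTF-8")
except locale.Error:
    print("fr_BE.UTF-8 is not installed here")

print(locale.setlocale(locale.LC_NUMERIC))  # query without changing: C
print(locale.setlocale(locale.LC_COLLATE))  # fr_BE.UTF-8, or still C
```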

Locales on POSIX systems have names that look like:

fr_BE.UTF-8

fr defines the language (French), BE defines the “territory” (Belgium, so we are talking about Belgian French), and UTF-8 is the character encoding. From this, we can determine that the character strings are encoded as UTF-8, and the collation rules are the rules for Belgian French.

If there’s only one character encoding available for a particular combination of language and territory, you’ll sometimes see the locale written without the encoding, like fr_BE.
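Apart from special names like C and POSIX, a locale name generally has the shape language[_territory][.codeset][@modifier], where only the language is required. A hypothetical helper that splits a name into those parts might look like this; it is a sketch for reading names, not how libc actually resolves them.

```python
import re
from typing import NamedTuple, Optional

# language[_territory][.codeset][@modifier], e.g. "fr_BE.UTF-8" or "de_DE@euro"
LOCALE_RE = re.compile(
    r"^(?P<language>[A-Za-z]+)"
    r"(?:_(?P<territory>[A-Za-z0-9]+))?"
    r"(?:\.(?P<codeset>[^@]+))?"
    r"(?:@(?P<modifier>.+))?$"
)


class LocaleName(NamedTuple):
    language: str
    territory: Optional[str]
    codeset: Optional[str]
    modifier: Optional[str]


def parse_locale_name(name: str) -> LocaleName:
    """Split a POSIX-style locale name into its parts (hypothetical helper)."""
    match = LOCALE_RE.match(name)
    if match is None:
        raise ValueError(f"not a language[_territory][.codeset][@modifier] name: {name!r}")
    return LocaleName(**match.groupdict())


print(parse_locale_name("fr_BE.UTF-8"))
# LocaleName(language='fr', territory='BE', codeset='UTF-8', modifier=None)
print(parse_locale_name("fr_BE"))
# LocaleName(language='fr', territory='BE', codeset=None, modifier=None)
```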

There is one locale (with two different names) that is a big exception to the rules, and an important one:

C or POSIX

The C locale (also called POSIX) uses rules defined by the C/C++ language standard. This means that the system doesn’t have an opinion about what encoding the strings are in, and it’s up to the programmer to keep track of them. (This is really true in any locale, but in the C locale, the system doesn’t even provide a hint as to what encoding to use or expect.) The collation function just compares strings byte-wise; this works great on ASCII, but on UTF-8 or UTF-16 it produces orderings that mean nothing to a human reader, and it can even report two canonically equivalent strings (such as é written as one code point, or as e plus a combining accent) as unequal. For functions, like case conversion, that need to know a character encoding, the C locale uses the rules for ASCII, which are completely and utterly wrong for most other encodings.
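Python can imitate these byte-wise rules by working on UTF-8 bytes directly. In this sketch, byte-wise sorting puts “été” after “fin”, two canonically equivalent spellings of é compare as unequal, and `bytes.lower()`, which only knows the ASCII letters, leaves Ü alone. None of this calls libc; it only mimics the behavior described above.

```python
import unicodedata

# Byte-wise ordering: é is encoded as C3 A9, which sorts after every ASCII letter.
words = ["été", "fin", "eau"]
print(sorted(words, key=lambda w: w.encode("utf-8")))  # ['eau', 'fin', 'été']

# Byte-wise equality: precomposed é (U+00E9) vs e + U+0301 COMBINING ACUTE ACCENT.
nfc = unicodedata.normalize("NFC", "é")
nfd = unicodedata.normalize("NFD", "é")
print(nfc.encode("utf-8") == nfd.encode("utf-8"))      # False
print(unicodedata.normalize("NFC", nfd) == nfc)        # True

# ASCII-only case conversion: bytes.lower() changes only A through Z.
print("ÜBER".encode("utf-8").lower().decode("utf-8"))  # Über
```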

PostgreSQL also provides a C.UTF-8 locale, which is a special case of a special case. We’ll talk about that in a future installment.

Locale Provider

Locales are really just bundles of code and data, so they need to come from somewhere so the system can use them. The code libraries that contain locales are locale providers. There are two important ones (plus one other that’s relevant to PostgreSQL only).

(g)libc

libc is the standard C library (and a lot of other stuff) on POSIX systems. It’s where 99% of programs on POSIX systems get locale information from. Different distributions of Linux and *BSD systems have different sets of locales that are provided as part of the base system, and others that can be installed in optional packages. On Linux, you’ll usually see libc as glibc, which is the Free Software Foundation’s GNU Project’s version of libc.

ICU

The International Components for Unicode (ICU) is a set of libraries from the Unicode Consortium that provides utilities for handling Unicode. ICU includes a large number of functions, including a large set of locales. One of the most important tools provided by ICU is an implementation of the Unicode Collation Algorithm, which provides a basic collation algorithm usable across multiple languages.

(Full disclosure: The work that ultimately became the ICU was started at Taligent, where I was the networking architect. It’s without a doubt the most useful thing Taligent ever produced.)

The PostgreSQL Built-In Provider

In version 17, PostgreSQL introduced a built-in locale provider. We’ll talk about that in a future installment.

What’s Next?

In the next installment, we’ll talk about how PostgreSQL uses all of this, and why it has resulted in some really terrible situations.
